A coalition of current and former employees from leading AI organizations, including OpenAI and Google DeepMind, has penned an open letter advocating for enhanced transparency and protections for whistleblowers in the artificial intelligence sector.

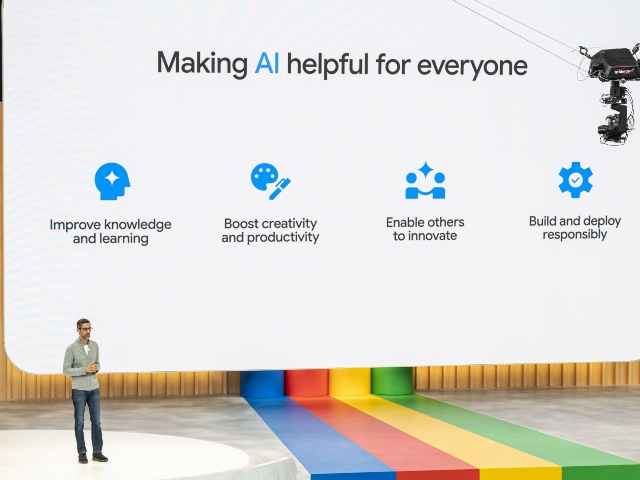

Sundar Pichai, chief executive officer of Alphabet Inc., during the Google I/O Developers Conference in Mountain View, California, US, on Wednesday, May 10, 2023. Photographer: David Paul Morris/Bloomberg

OpenAI chief Sam Altman looking lost (Mike Coppola/Getty)

The open letter advocates for a “right to warn about artificial intelligence” and asks companies to adhere to four principles focused on transparency and accountability. Among other things, the principles stipulate that companies should not compel employees to sign non-disparagement agreements that bar them from discussing AI-related risks, and should provide a channel through which employees can anonymously report concerns to board members.

The signatories underscore their critical role in holding AI companies accountable to the public, especially given insufficient government oversight. They contend that wide-ranging confidentiality agreements prevent them from voicing their concerns to anyone except the very companies that may be failing to address them.

In response, OpenAI stated that it offers various channels, such as a tipline, for reporting internal issues, and gave assurances that new technology would be released only after proper safeguards were in place. The letter nonetheless follows the recent departures of two senior OpenAI employees, co-founder Ilya Sutskever and key safety researcher Jan Leike. Leike claimed that OpenAI had shifted away from a focus on safety to prioritize “shiny products.”

Concerns about the possible risks of artificial intelligence have grown in recent years, with technological advancements outpacing regulatory responses. Although AI firms have publicly committed to developing AI technologies safely, researchers and employees have warned that oversight is inadequate and that, without it, AI could exacerbate existing societal problems or introduce new ones.